Introduction

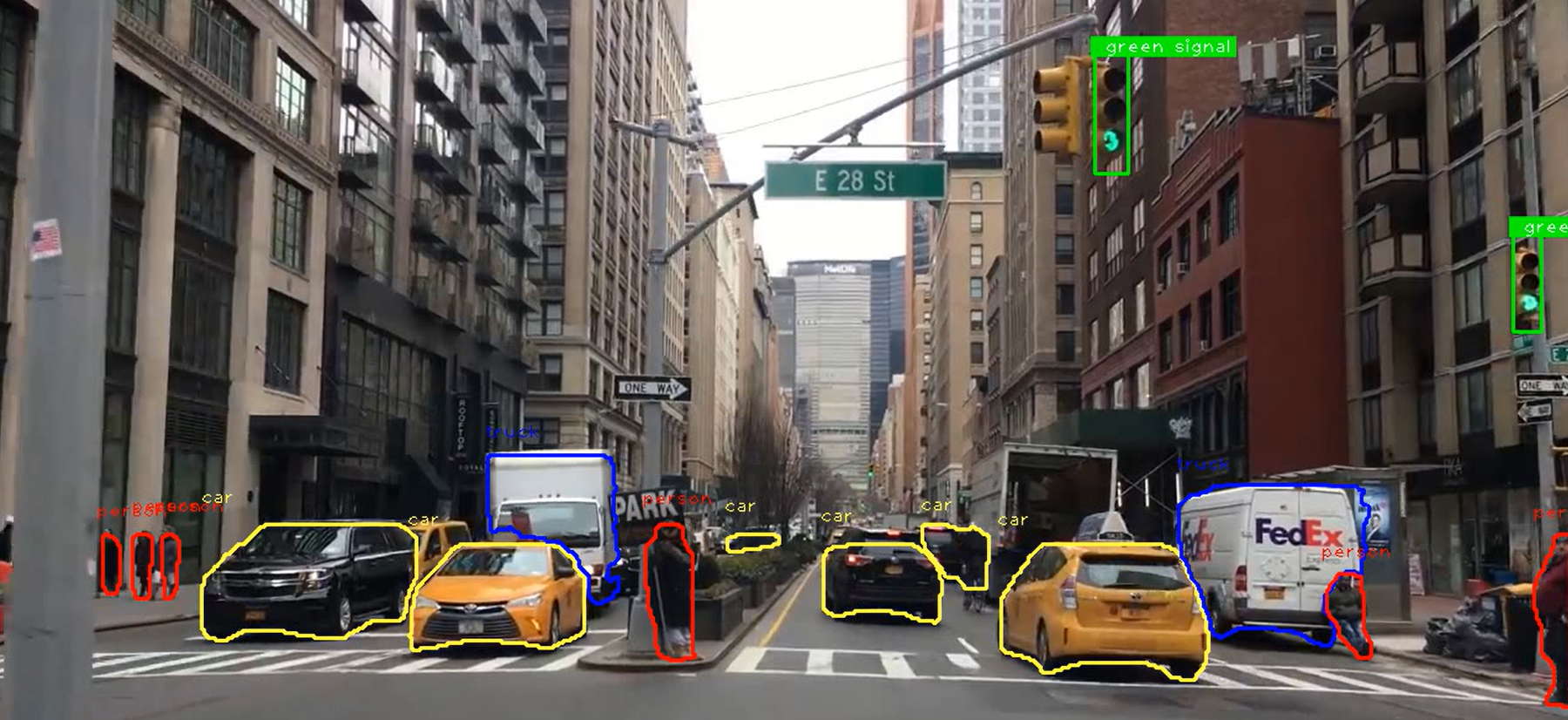

The objective of this project is to segment the road scene in real time, using a camera mounted on the vehicle, as a building block for autonomous navigation. The system will use the YOLO (You Only Look Once) algorithm to detect and segment road elements such as cars and pedestrians in real time.

Additionally, the project aims to train a custom deep learning model from scratch to accurately detect and classify traffic signals based on their colors: green, red, and yellow.

Overview

a) Camera Input:

The system will use a camera mounted on the car to capture real-time video frames of the road and its surroundings. These frames will serve as the primary input for the subsequent processing stages.
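
A minimal sketch of the camera input stage, assuming OpenCV (`opencv-python`) reads the camera and that the camera appears as device index 0. Both are assumptions, since the project does not name its capture library. The frame loop accepts any object with a `read()` method returning `(ok, frame)`, so the same code also works on recorded video. `iter_frames`, `open_camera` and `preview` are hypothetical helper names.

```python
from typing import Any, Iterator, Optional, Union


def iter_frames(capture: Any, max_frames: Optional[int] = None) -> Iterator[Any]:
    """Yield frames from an OpenCV-style capture until it stops returning them.

    `capture` only needs a read() method that returns (ok, frame), which is
    the interface cv2.VideoCapture provides.
    """
    count = 0
    while max_frames is None or count < max_frames:
        ok, frame = capture.read()
        if not ok:  # camera disconnected or end of a recorded video
            break
        yield frame
        count += 1


def open_camera(source: Union[int, str] = 0) -> Any:
    """Open a camera by device index (assumed 0) or a video file path."""
    import cv2  # lazy import: iter_frames itself does not need OpenCV

    capture = cv2.VideoCapture(source)
    if not capture.isOpened():
        raise RuntimeError(f"could not open video source {source!r}")
    return capture


def preview(source: Union[int, str] = 0, max_frames: int = 300) -> None:
    """Show the live feed in a window; press 'q' to stop early."""
    import cv2

    capture = open_camera(source)
    try:
        for frame in iter_frames(capture, max_frames):
            cv2.imshow("camera", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

Keeping the loop separate from `cv2.VideoCapture` lets the later stages consume frames without caring whether they come from the live camera or a test video.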

b) Live Segmentation using YOLO:

The YOLO algorithm, which provides real-time object detection and segmentation, will be employed to identify and classify road elements. Specifically, the model will detect and segment cars and pedestrians in the video frames, enabling the self-driving car to perceive its environment accurately.
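
A sketch of per-frame segmentation restricted to cars and pedestrians, assuming the `ultralytics` package and its COCO-pretrained `yolov8n-seg.pt` checkpoint (the project does not say which model size it uses). `class_ids_for`, `load_segmentation_model` and `segment_frame` are hypothetical helper names; `YOLO(...)`, `predict(..., classes=...)` and `Results.plot()` are Ultralytics calls.

```python
from typing import Any, Dict, Iterable, List

# Classes of interest. Both names exist in the 80 COCO classes that the
# pretrained YOLOv8 models predict ("person" covers pedestrians).
ROAD_CLASSES = ("person", "car")


def class_ids_for(names: Dict[int, str], wanted: Iterable[str]) -> List[int]:
    """Map class names to the integer IDs listed in a model's `names` dict."""
    wanted_set = set(wanted)
    return sorted(idx for idx, name in names.items() if name in wanted_set)


def load_segmentation_model(weights: str = "yolov8n-seg.pt") -> Any:
    """Load a COCO-pretrained YOLOv8 segmentation model (downloaded on first use)."""
    from ultralytics import YOLO  # lazy import; requires `pip install ultralytics`

    return YOLO(weights)


def segment_frame(model: Any, frame: Any, conf: float = 0.25) -> Any:
    """Segment cars and pedestrians in one BGR frame; return an annotated copy."""
    ids = class_ids_for(model.names, ROAD_CLASSES)
    results = model.predict(frame, classes=ids, conf=conf, verbose=False)
    return results[0].plot()  # masks, boxes and labels drawn on the frame
```

For example, `segment_frame(load_segmentation_model(), frame)` returns an image that can be shown with `cv2.imshow`. Filtering inside `predict` rather than afterwards keeps the other COCO classes out of the drawn output.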

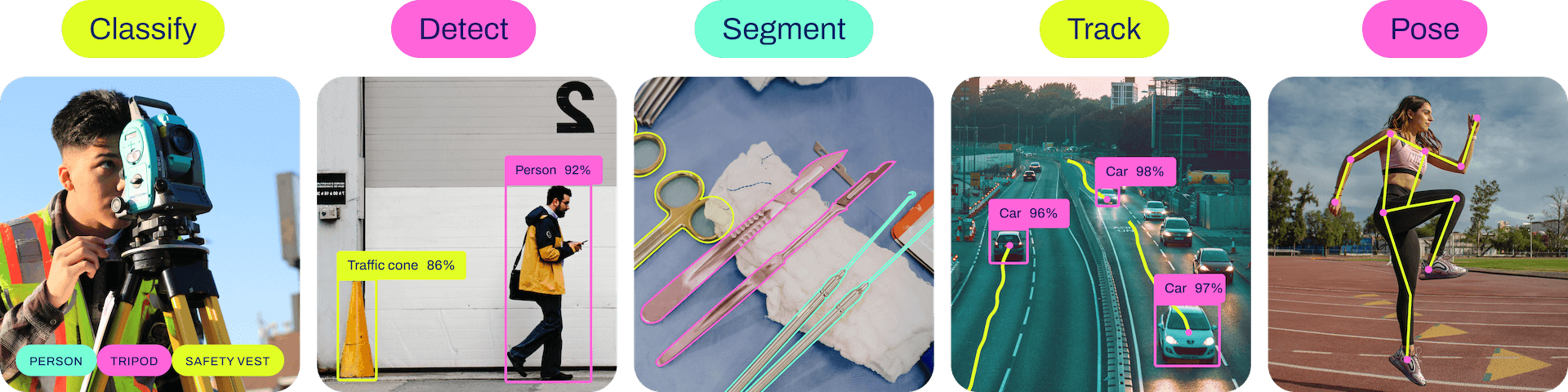

Ultralytics YOLOv8 is a state-of-the-art (SOTA) model that builds on previous YOLO versions and introduces new features and improvements to boost performance and flexibility. YOLOv8 is designed to be fast, accurate, and easy to use, which makes it a good choice for a wide range of tasks: object detection and tracking, instance segmentation, image classification, and pose estimation.

For more information, visit: https://github.com/ultralytics/ultralytics

YOLOv8 Detect, Segment and Pose models pretrained on the COCO dataset, as well as YOLOv8 Classify models pretrained on the ImageNet dataset, are available from the Ultralytics repository. Track mode is available for all Detect, Segment and Pose models.
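
The pretrained checkpoints are named by model size (n, s, m, l, x) plus a task suffix, and Track mode is invoked with `model.track`. The sketch below builds checkpoint names and streams track IDs from a video. `checkpoint_name` and `track_ids_per_frame` are hypothetical helpers, and the file names should be checked against the Ultralytics documentation for the installed version.

```python
from typing import Iterator, List, Tuple

SIZES = ("n", "s", "m", "l", "x")
TASK_SUFFIX = {"detect": "", "segment": "-seg", "pose": "-pose", "classify": "-cls"}


def checkpoint_name(task: str = "detect", size: str = "n") -> str:
    """Return the pretrained YOLOv8 weight file name for a task and model size."""
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task {task!r}")
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}")
    return f"yolov8{size}{TASK_SUFFIX[task]}.pt"


def track_ids_per_frame(video_path: str, task: str = "segment",
                        size: str = "n") -> Iterator[Tuple[int, List[int]]]:
    """Yield (frame_index, track_ids) for each frame of a video."""
    if task == "classify":
        raise ValueError("Track mode applies to Detect, Segment and Pose models only")
    from ultralytics import YOLO  # lazy import; requires `pip install ultralytics`

    model = YOLO(checkpoint_name(task, size))
    # stream=True yields one Results object per frame instead of a full list.
    for index, result in enumerate(model.track(source=video_path, stream=True)):
        ids = result.boxes.id  # None when nothing is being tracked in this frame
        yield index, ([] if ids is None else ids.int().tolist())
```

A track ID that persists across frames is what lets the system follow the same car or pedestrian over time rather than re-detecting it from scratch in every frame.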

c) Traffic Signal Detection:

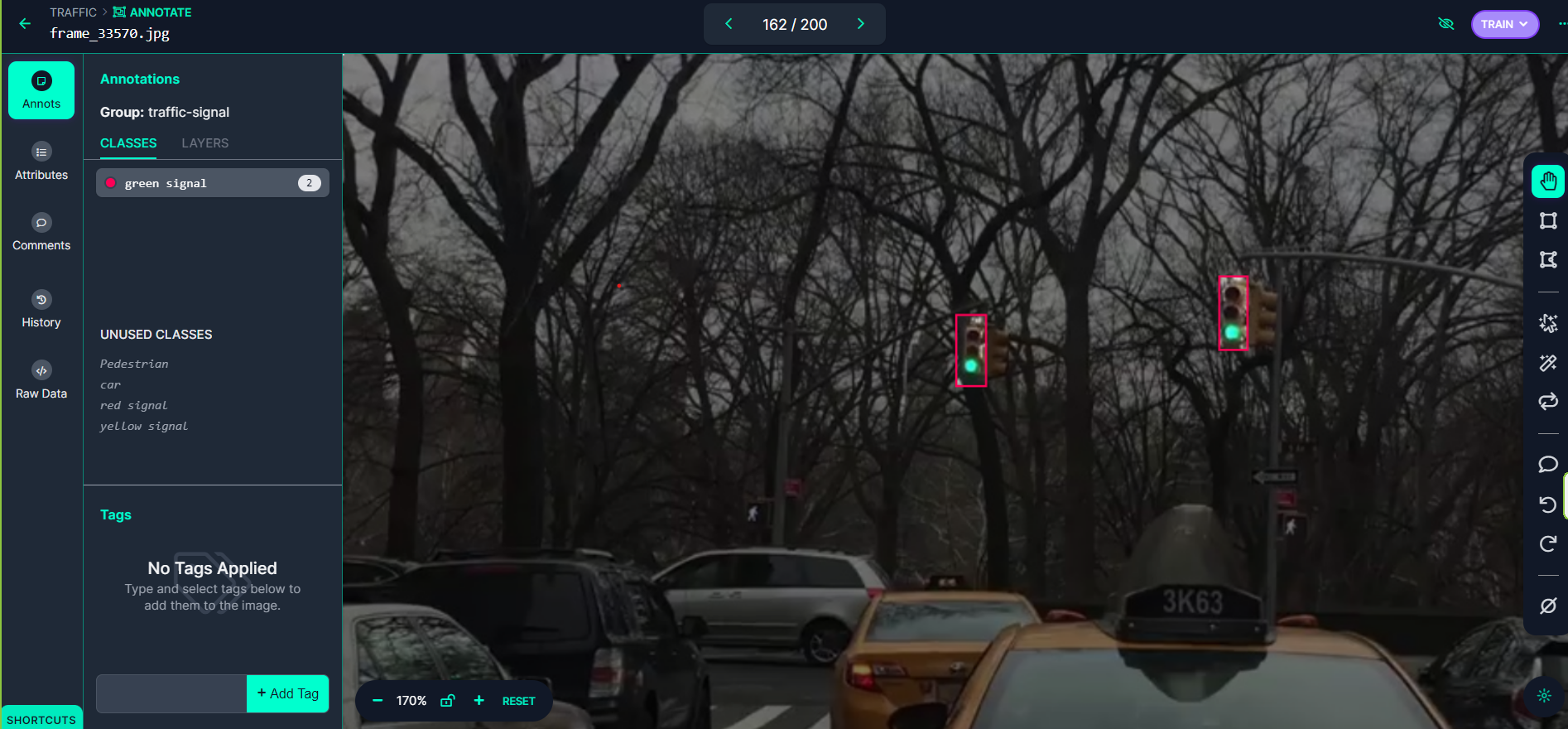

To detect and classify traffic signals based on their colors, a custom deep learning model will be trained from scratch. This model will be responsible for analyzing the segmented regions of the video frames and accurately identifying green, red, and yellow traffic signals.
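
If the pipeline first locates a signal and then analyzes only that region, it has to cut the region out of the frame. A minimal sketch, assuming boxes arrive as `(x1, y1, x2, y2)` pixel coordinates and frames are NumPy arrays of shape `(height, width, channels)`, as OpenCV returns them. `clamp_box` and `crop_region` are hypothetical names.

```python
import math
from typing import Any, Tuple


def clamp_box(box: Tuple[float, float, float, float],
              width: int, height: int) -> Tuple[int, int, int, int]:
    """Convert a float (x1, y1, x2, y2) box to integer pixels inside the frame.

    Lower edges are floored and upper edges ceiled so the crop never cuts
    off a partially covered pixel; everything is clipped to the frame size.
    """
    x1, y1, x2, y2 = box
    return (
        max(0, min(width, math.floor(x1))),
        max(0, min(height, math.floor(y1))),
        max(0, min(width, math.ceil(x2))),
        max(0, min(height, math.ceil(y2))),
    )


def crop_region(frame: Any, box: Tuple[float, float, float, float]) -> Any:
    """Return the part of a (height, width, channels) frame inside `box`."""
    height, width = frame.shape[:2]
    x1, y1, x2, y2 = clamp_box(box, width, height)
    return frame[y1:y2, x1:x2]  # NumPy slicing: rows are y, columns are x
```

Clipping matters because detectors can return boxes that extend slightly past the image border, and a negative start index would silently wrap around in NumPy.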

The pretrained YOLOv8 models detect only the 80 COCO classes. These include a generic "traffic light" class but do not distinguish the signal's color. To detect traffic signals by color, we therefore need to train a custom model for that task. This requires a separate dataset of labeled traffic signal images captured from various angles, distances, and lighting conditions. The model is then trained to identify and classify the color of each traffic signal (green, red, or yellow).
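
YOLO-family detectors are usually trained on one text file per image, where each line holds a class index plus a box center, width and height normalized to the image size (Roboflow offers this as a YOLO export format). A sketch of that conversion for the three signal colors; the class order in `CLASS_NAMES` is an assumption and must match the dataset configuration, and `to_yolo_label` is a hypothetical name.

```python
from typing import Tuple

# Assumed class order; it must match the `names` list used for training.
CLASS_NAMES = ["green", "red", "yellow"]


def to_yolo_label(class_name: str, box: Tuple[float, float, float, float],
                  img_w: int, img_h: int) -> str:
    """Convert a pixel box (x1, y1, x2, y2) into one YOLO label line.

    Output: "<class> <x_center> <y_center> <width> <height>", with all
    coordinates divided by the image size so they fall in [0, 1].
    """
    x1, y1, x2, y2 = box
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box must have positive width and height")
    class_id = CLASS_NAMES.index(class_name)
    x_center = (x1 + x2) / 2 / img_w
    y_center = (y1 + y2) / 2 / img_h
    width = (x2 - x1) / img_w
    height = (y2 - y1) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

For example, a red signal at pixels (100, 50) to (140, 130) in a 640×480 image becomes `to_yolo_label("red", (100, 50, 140, 130), 640, 480)`, which returns `"1 0.187500 0.187500 0.062500 0.166667"`.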

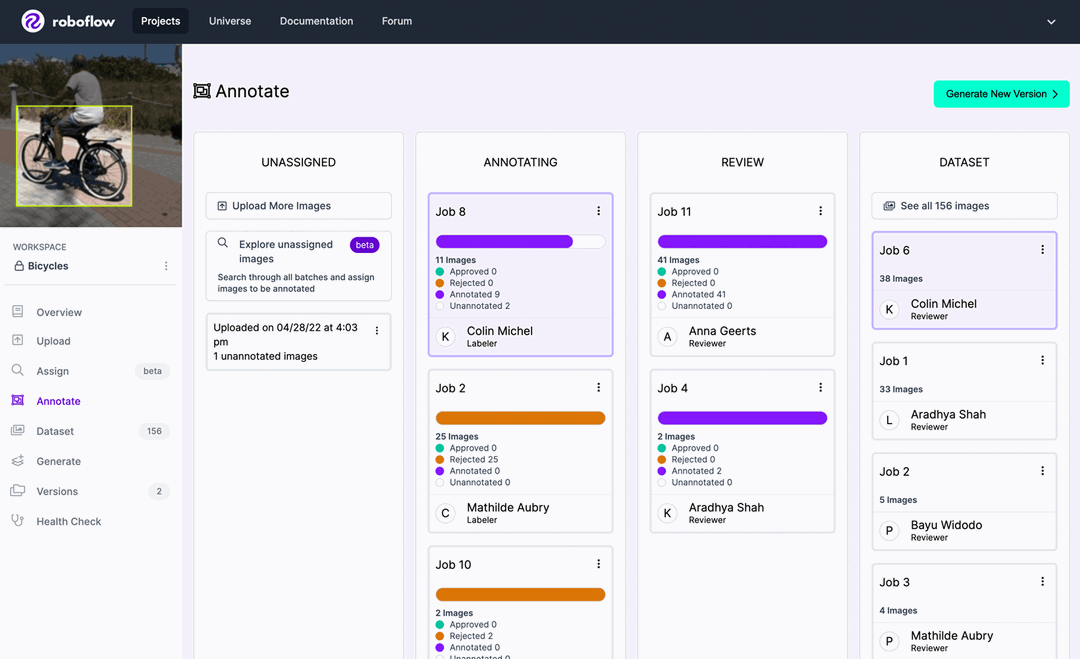

1- Annotation

To accomplish this, we can use Roboflow to extract images from videos, annotate them, and apply various augmentation options. Roboflow offers these features for free, which streamlines the data preparation for our custom traffic signal detection model.
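
Roboflow performs the video-to-image step in its web interface. For footage that is easier to process locally, a comparable sketch with OpenCV keeps roughly `target_fps` frames per second of video. `frame_step` and `extract_frames` are hypothetical helpers, and the 30 FPS fallback for files without frame-rate metadata is an assumption.

```python
import os


def frame_step(video_fps: float, target_fps: float) -> int:
    """Number of frames to advance between saved images (at least 1)."""
    if video_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    return max(1, round(video_fps / target_fps))


def extract_frames(video_path: str, out_dir: str, target_fps: float = 1.0) -> int:
    """Save every n-th frame of a video as a JPEG and return how many were saved."""
    import cv2  # lazy import so frame_step works without OpenCV

    capture = cv2.VideoCapture(video_path)
    fps = capture.get(cv2.CAP_PROP_FPS) or 30.0  # assumed fallback if metadata is missing
    step = frame_step(fps, target_fps)
    os.makedirs(out_dir, exist_ok=True)
    saved = index = 0
    while True:
        ok, frame = capture.read()
        if not ok:  # end of video
            break
        if index % step == 0:
            cv2.imwrite(os.path.join(out_dir, f"frame_{index:06d}.jpg"), frame)
            saved += 1
        index += 1
    capture.release()
    return saved
```

Sampling sparsely avoids filling the dataset with near-identical consecutive frames, which add annotation work without adding much variety.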

Here is an example:

2- Training the model

Google Colab is a platform that provides free access to GPUs, which makes it a good choice for training machine learning models. We can use it to train our custom model for detecting traffic signals.
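
A sketch of the training step, assuming the `ultralytics` package (installed in Colab with `!pip install ultralytics`) and a dataset with `train/images` and `valid/images` folders, the layout Roboflow's YOLOv8 export typically uses. The paths, epoch count and image size are illustrative assumptions, not the project's settings, and `data_yaml` and `train_signal_model` are hypothetical names. Building the model from `yolov8n.yaml` gives randomly initialized weights, which matches the "from scratch" goal; `yolov8n.pt` would instead fine-tune COCO-pretrained weights.

```python
from typing import Any, Sequence


def data_yaml(root: str, class_names: Sequence[str],
              train: str = "train/images", val: str = "valid/images") -> str:
    """Build the dataset YAML that Ultralytics reads (paths relative to `root`)."""
    lines = [f"path: {root}", f"train: {train}", f"val: {val}", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def train_signal_model(data_file: str = "data.yaml", from_scratch: bool = True,
                       epochs: int = 100, imgsz: int = 640) -> Any:
    """Train a YOLOv8 detector on the traffic signal dataset."""
    from ultralytics import YOLO  # lazy import; requires `pip install ultralytics`

    # A .yaml file defines only the architecture (random weights);
    # a .pt file loads pretrained weights to fine-tune.
    model = YOLO("yolov8n.yaml" if from_scratch else "yolov8n.pt")
    # Ultralytics selects a GPU automatically when the Colab runtime provides one.
    model.train(data=data_file, epochs=epochs, imgsz=imgsz)
    return model
```

For example, write `data_yaml("/content/traffic-signals", ["green", "red", "yellow"])` to `data.yaml`, then call `train_signal_model("data.yaml")`. Training from scratch generally needs more data and epochs than fine-tuning, so the pretrained option is a useful baseline to compare against.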

3- Testing the model

We tested our detection model on a video, and it correctly identified and tracked the traffic signals in the footage. This result points to potential applications in autonomous driving, traffic management, and road safety, and motivates us to keep refining and optimizing the model for real-world use.
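
When testing on a video, it can help to reduce each frame's detections to a single signal state. One simple rule is to keep the most confident detection above a threshold. A sketch under stated assumptions: the trained weights sit at `runs/detect/train/weights/best.pt` (the default location for a first Ultralytics training run), the 0.5 threshold is arbitrary, and `strongest_signal` and `signal_per_frame` are hypothetical names.

```python
from typing import Dict, Iterable, Iterator, Optional, Tuple


def strongest_signal(detections: Iterable[Tuple[int, float]],
                     names: Dict[int, str],
                     min_conf: float = 0.5) -> Optional[str]:
    """Return the color name of the most confident detection, or None.

    `detections` holds (class_id, confidence) pairs for one frame; the first
    detection wins when two share the highest confidence.
    """
    confident = [(cls_id, conf) for cls_id, conf in detections if conf >= min_conf]
    if not confident:
        return None
    cls_id, _ = max(confident, key=lambda item: item[1])
    return names[cls_id]


def signal_per_frame(video_path: str,
                     weights: str = "runs/detect/train/weights/best.pt"
                     ) -> Iterator[Optional[str]]:
    """Track signals through a video and yield one color (or None) per frame."""
    from ultralytics import YOLO  # lazy import; requires `pip install ultralytics`

    model = YOLO(weights)
    for result in model.track(source=video_path, stream=True):
        boxes = result.boxes
        pairs = zip(boxes.cls.int().tolist(), boxes.conf.tolist())
        yield strongest_signal(pairs, model.names)
```

Returning `None` for frames without a confident detection keeps "no signal visible" distinct from any of the three colors.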